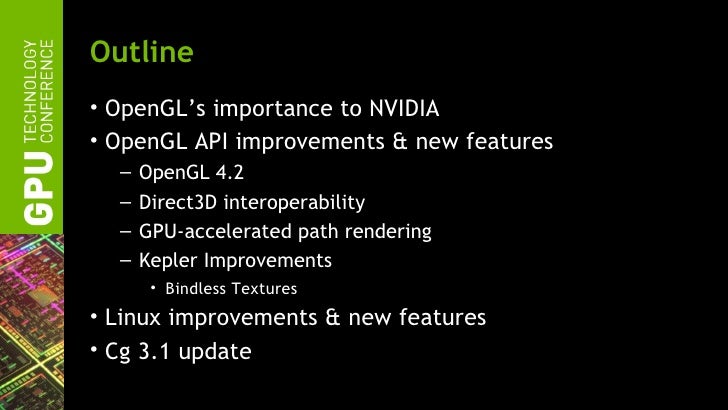

Download Nvidia Cg Toolkit 3.1 For Mac

Jan 19, 2013 - Install the NVIDIA Cg Toolkit for rViz by downloading their installation package: http://developer.download.nvidia.com/cg/Cg_3.1/Cg-3.1_April2012.

After reading through some more stuff, I think my second post hit it on the head. The main advantage is being able to work at a high level rather than in assembly. Looking through some of the testimonials from developers who have been working with Cg, half of them go something like this: 'Right now we have 1 or 2 people that can read and write shaders, but with Cg everyone will be able to work with it.' Of course, that's a bit of an optimistic statement.

There is still the high bar of having to understand a lot of the math that goes on. Then again, if one developer writes some Cg subroutines for transforming into tangent space and so on, then all the other developers need to know is that they need to transform into tangent space. They won't need to know how to do it, and they won't need to worry about taking the assembly instructions someone else provided, mixing those in with other instructions, and worrying about temp register collisions, etc.
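To make the "write it once, everyone else just calls it" point concrete, here is a minimal sketch of what such a tangent-space helper does. It is written in Python rather than Cg purely for illustration. The function names and the sample basis are made up for this example, and it assumes the tangent, bitangent and normal are already orthonormal.

```python
def dot(a, b):
    """Dot product of two 3-component vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def to_tangent_space(v, tangent, bitangent, normal):
    """Express object-space vector v in the (tangent, bitangent, normal) basis.

    Assumes the three basis vectors are orthonormal, so the transform is
    just three dot products (hypothetical helper, not part of any Cg library).
    """
    return (dot(v, tangent), dot(v, bitangent), dot(v, normal))


# Callers only need to know that a tangent-space vector comes back,
# not how the projection is done.
tangent = (0.0, 1.0, 0.0)
bitangent = (0.0, 0.0, 1.0)
normal = (1.0, 0.0, 0.0)
light_dir = (1.0, 2.0, 3.0)
light_ts = to_tangent_space(light_dir, tangent, bitangent, normal)
```

In assembly the same three dot products would have to be pasted into every shader by hand, with the temp registers picked so they don't collide with the surrounding code.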

Now another thing is that this is Cg 1.0, and I read that they will be shipping Cg 2.0 with the launch of the NV30. If they are just upgrading it to add an NV30 profile, that's well and fine. But if they will be adding new features to the language to support the NV30, then things are going wrong, and it's not likely other IHVs will adopt Cg because it would favor NVIDIA too much. Basically, the only way I see it succeeding as an 'industry standard' is if it is fully featured enough in version 1 to support several years' worth of graphics cards. That said, even if it doesn't become an industry standard, at least it will ease development on the NVIDIA side of things, having one language for OpenGL 1.4/2.0 and DirectX 8/9. One thing I noticed, though: I read the specification and it mentions the 'fp20 profile for compiling fragment programs to NV2X's OpenGL API'.

Then the spec goes on to give detailed descriptions of DirectX 8 vertex shaders, DirectX 8 pixel shaders, and OpenGL vertex programs. No detailed description for the fragment programs. What happened?

Originally posted by LordKronos: In thinking more about it, I am actually starting to realize that perhaps the largest benefit of Cg is that you can write shaders in a high level language. I know that's pretty obvious, and it's something that NVIDIA is pointing out, but I guess it didn't sink in for me because I personally am pretty comfortable with things like assembly language.

Writing shaders in assembly or using register combiners is not a holdup for me. However, for a lot of programmers, it is. I always thought register combiners were perfectly fine, but in speaking to some NVIDIA guys a few years ago, they said their biggest complaint was that a lot of developers were having trouble learning or getting comfortable with combiners. The same thing happens in assembly: a lot of programmers don't get the concept of a limited number of registers. They have X registers, but need 5X temporary variables. Sharing the registers among their 'variables' just doesn't click with them.
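For anyone who hasn't had to do this by hand, the register sharing described above can be sketched roughly like this. It's a toy illustration in Python, not how the Cg compiler actually does it. The function name, the variable names and the live ranges are all made up. Each temporary is live from the instruction that writes it to the last instruction that reads it, and a register is handed to a new temporary once the old one is dead.

```python
def assign_registers(live_ranges, num_registers):
    """Map each temporary to a register index, reusing registers when possible.

    live_ranges: dict of name -> (first_instr, last_instr), both inclusive.
    Raises RuntimeError if more temporaries are live at once than there
    are registers. (Hypothetical helper for illustration only.)
    """
    free = list(range(num_registers))
    active = []  # (last_instr, name) for temporaries still holding a register
    assignment = {}
    # Visit temporaries in order of their first write.
    for name, (start, end) in sorted(live_ranges.items(), key=lambda kv: kv[1][0]):
        # Hand back registers whose temporary was last read before this write.
        still_active = []
        for a_end, a_name in active:
            if a_end < start:
                free.append(assignment[a_name])
            else:
                still_active.append((a_end, a_name))
        active = still_active
        if not free:
            raise RuntimeError("out of registers at temporary " + name)
        free.sort()
        assignment[name] = free.pop(0)
        active.append((end, name))
    return assignment


# Five 'variables', but never more than two alive at the same time.
ranges = {"a": (0, 1), "b": (1, 2), "c": (2, 3), "d": (3, 4), "e": (4, 5)}
regs = assign_registers(ranges, 2)
```

With these made-up ranges, five temporaries fit in two registers. Doing that bookkeeping by hand for every shader is exactly the part that trips people up, and it is what a high level compiler takes over.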

Being able to program in a high level language will let a lot more people do it.

I thought the main benefit of a high level language is 'speed benefits'. E.g. with CPUs, it's interesting to note that a lot of the assembly 'tricks' from a couple of years ago actually run slower than the C version written then and compiled today (i.e. the asm is set in stone, the higher level language ain't). Multiply that by about a factor of 5 (about the rate graphics hardware seems to be advancing compared to CPUs). BTW, I've said this at least 10x in the last couple of years: don't bother learning pixel shaders, because the syntax will soon be superseded. Well, here's further proof.

Wait a minute. I don't want that to happen. It makes my skills LESS valuable. I'm right behind you, ehor.

Edit: I agree with davepermen (+ others), this being a 'standard' is a bad idea. BIG QUESTION: how much input from sources outside of NVIDIA went into the design of this? Also, something no one's mentioned: I don't think MS will be too happy about this wrt D3D. I know relations between MS and NVIDIA haven't been going too well for a while (perhaps because of the Xbox failure?). To lighten the topic, I just made up a joke. Q: What's the difference between the Xbox and the dodo? A: The dodo managed to hold out for a few years. OK, OK, feel free to shoot me (offer only open for 24 hours).

'Edit: I agree with davepermen (+ others), this being a 'standard' is a bad idea.' Why? Standards are good. People seem to imply that NV is trying to steal people away from GL2's shading system.

Cg allows you to create a single 'shader' that compiles down to GL2, GL1.4, DX8, and DX9, and across multiple architectures, simply by changing the profile at compile time. The original source shader stays the same. Not all hardware is going to be able to support GL2 completely.


Cg still allows you to use a single shading language for current hardware, which will be mainstream for quite a while.

Hi, I think I will play with it. Perhaps I will use it for my game, for which I'm currently writing the base. It would be great if ATI wrote a profile ASAP, so I could raise the minimum graphics card to a GF3/Radeon 8500 without writing multiple code paths. As mentioned by others before, I'm missing a profile for register combiners/texture shaders. (I could write a pixel shader and pass it to nvparse, but that is not the point of the exercise.) Perhaps they should make the profile system open source, so that others, not only IHVs, can write a backend for their own purposes.


Perhaps it is a little bit silly, but what about writing a fragment backend for ARB_texture_env_combine, so you could write a shader that makes TNT2/Rage users happy? Bye, ScottManDeath.

I've been playing with this new Cg stuff for a while now and all I have to say is GOOD JOB NVIDIA!!

I think it's great. I mean, writing the vertex programs in assembly and stuff wasn't too bad, I did like it a lot.

But heck, now with Cg, I like writing shaders even more. The only thing that interested me in OpenGL 2.0 was how shaders were written (in a C-like language), but now with this, I couldn't care less about OpenGL 2.0. Cg will work from a GF3 on up RIGHT NOW, and hopefully on other cards (like ATI and stuff), which is what I think they were also trying to get at with this. It looks like some here are not as excited about it as I am, but I guess you can't please everyone. I am actually surprised some have said negative things about it; this is a pretty darn powerful thing here. Once the next-gen cards come out with a more powerful programmable GPU, this Cg language will be even more awesome.